As far as transport protocols go, TCP is the most used today, and when it comes to TCP implementations, there are as many TCP stacks as there are networked operating systems. This includes everything from Windows PCs to Android phones and Cisco routers. In most cases, an update to TCP requires a firmware change or OS update, and every endpoint, router and network appliance on the path may need to deploy a feature for it to work.

That is not the case with QUIC, an encrypted transport-layer protocol that runs on top of UDP. Because QUIC implementations can live in userspace, development can happen separately from the OS. This means control over transport features shifts away from network vendors and operating system implementors to application service providers: the enterprises that run the web, like Google and Facebook.

The IETF standards for HTTP/3 and QUIC version 1 are about to be published, and the software ecosystem to go with them is still emerging. Many implementations are still under development, and while some provide servers and clients for the QUIC transport, there are very few production-ready, out-of-the-box HTTP/3 servers.

Serving a website using HTTP/3 over QUIC needs:

It’s March 2021 at the time of writing. There are several open-source implementations of QUIC, in various stages of readiness. Here’s a diagram of all the QUICs, their TLS library dependencies, and who implements and uses them. Those who run their variant in production are highlighted in orange:

This diagram is not exhaustive; the IETF QUIC working group maintains its own list, including non-open-source implementations.

All the implementations rely on some sort of custom TLS library: the most popular appears to be BoringSSL, Google’s fork of OpenSSL. Other implementations take the approach of developing their own TLS 1.3 libraries (like PicoTLS), which depend on OpenSSL. It doesn’t look like GnuTLS is making an appearance.

Unsurprisingly, Google, who originated the protocol, have been using QUIC in production for a few years (and have switched to IETF QUIC).

Facebook allegedly use QUIC for more than 75% of their traffic.

Fastly and Cloudflare now allow their customers to enable QUIC for their websites.

Akamai have been using their own implementation since 2016.

Client-side, there is support for QUIC in major browsers, including Firefox Nightly since last year, although it’s not enabled by default.

With so many implementations, perhaps you’re wondering if they all talk to each other? Well, the QUIC Working Group also runs interoperability testing of the various implementations.

If you’re not a big corporation and want to serve your website over QUIC, then right now you have a few options:

To run an HTTP/3 server out of the box on any OS, the custom TLS libraries first need to make their way into the respective distributions.

On top of this, web servers like Apache and NGINX also need to integrate QUIC/HTTP/3 support in a main release.

Any other QUIC and HTTP/3 implementations would also need to be packaged for major server distributions.

In my experience with Debian packaging, this is only likely to happen after the software is mature enough, by which point TLS dependencies will have already been packaged.

For now, everything needs to be built from source.

You can of course host your website with Cloudflare or Fastly and simply enable QUIC - but where’s the fun in that?

I’ve deployed nginx-quic on a Raspberry Pi.

This was complicated by a bug in gcc 8.3 that I hit when building BoringSSL, which meant upgrading the Pi from buster to bullseye, the soon-to-be Debian Stable. After upgrading, BoringSSL built as per the instructions in its repo.

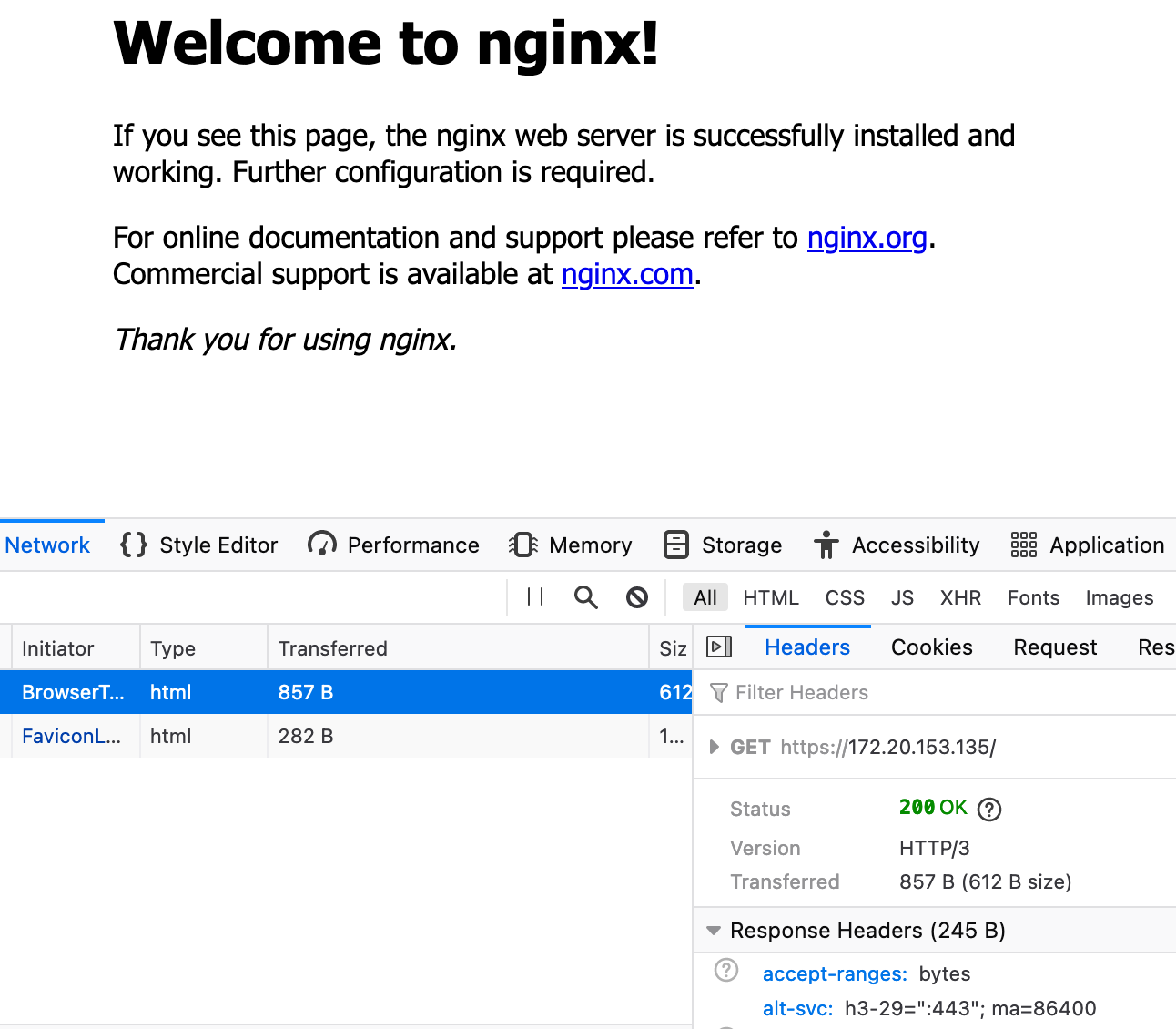

Nginx-quic provides a guide for building and configuring the server. I configured the server as per the instructions, and tested it with Firefox versions 78 and 85, and Chrome version 88, on both Linux and macOS.

A server signals the presence of HTTP/3 to a client with the Alt-Svc response header, which tells the client which HTTP/3 (and therefore QUIC) versions are supported and which port to use.
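To make the Alt-Svc mechanism more concrete, here is a minimal Python sketch that parses a header value into the advertised protocol, host, port and max age ("ma"). The example header value is an illustrative assumption, not necessarily what nginx-quic sends. The parser is deliberately simplified: it ignores corner cases of RFC 7838 such as commas inside quoted strings.

```python
def parse_alt_svc(value: str) -> list:
    """Parse an Alt-Svc header value into a list of alternative services."""
    if value.strip() == "clear":
        return []  # "clear" tells the client to forget cached alternatives
    services = []
    for entry in value.split(","):
        parts = [p.strip() for p in entry.split(";") if p.strip()]
        if not parts:
            continue
        protocol, _, authority = parts[0].partition("=")
        host, _, port = authority.strip().strip('"').rpartition(":")
        params = {}
        for param in parts[1:]:
            key, _, val = param.partition("=")
            params[key.strip()] = val.strip().strip('"')
        services.append({
            "protocol": protocol.strip(),  # ALPN token, e.g. "h3" or "h3-29"
            "host": host,                  # empty string means "same host"
            "port": int(port),
            "max_age": int(params.get("ma", 86400)),  # RFC 7838 default: 24h
        })
    return services


header = 'h3=":443"; ma=86400, h3-29=":443"; ma=86400'
for svc in parse_alt_svc(header):
    print(svc["protocol"], svc["port"], svc["max_age"])
# prints:
# h3 443 86400
# h3-29 443 86400
```

A browser that sees such a header caches the alternative for "ma" seconds, which is why the switch to HTTP/3 only happens on a later request.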

In theory, the second time a resource is requested it will use HTTP/3.

For my server, this worked… eventually, with Firefox 85, after much page reloading, cache clearing and browser restarting. The logs show about 20 HTTP/1.1 requests before HTTP/3 was finally used.

I have not managed to get Firefox 78 or the latest Chrome to do the same, despite enabling QUIC support.
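You can script the log count mentioned above: how many requests arrived over older protocols before the first HTTP/3 one. This Python sketch makes two assumptions. It expects nginx's default "combined" log format, and it expects HTTP/3 requests to be logged with a protocol string beginning with "HTTP/3". Adjust the regular expression if your log_format differs.

```python
import re
from collections import Counter

# Matches the quoted request line of the "combined" format, e.g.
# "GET /index.html HTTP/1.1", and captures the protocol.
REQUEST_RE = re.compile(r'"[A-Z]+ \S+ (HTTP/[0-9.]+)"')


def protocols_until_h3(lines):
    """Count protocols seen before the first HTTP/3 request.

    Returns (counts, found): counts is a Counter of protocol strings seen
    before the first HTTP/3 request line; found says whether HTTP/3 appeared.
    """
    counts = Counter()
    for line in lines:
        m = REQUEST_RE.search(line)
        if not m:
            continue  # skip lines without a parsable request line
        proto = m.group(1)
        if proto.startswith("HTTP/3"):
            return counts, True
        counts[proto] += 1
    return counts, False
```

Passing an open access log file (its path depends on how nginx-quic was built) as the lines argument gives the per-protocol counts up to the first HTTP/3 request.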

On one hand, controlling software at both endpoints of a network path (like Google controls its own servers and your browser!) means innovation in the QUIC transport can happen really fast.

But right now, each of my browsers comes with a different implementation of QUIC. If I want cURL with HTTP/3 support I’ll likely need to install yet another QUIC library. What happens when more applications use it? All this seems wasteful when you consider that all applications on an endpoint share a single TCP stack.

| Board | Processor | RAM | JTAG | Test images? | QEMU machine | Guide | Spec |
|---|---|---|---|---|---|---|---|
| Raspberry Pi, Pi 0 | 1GHz ARM1176JZF-S | 512MB | | | | https://www.cl.cam.ac.uk/projects/raspberrypi/tutorials/os/ | https://www.raspberrypi.org/products/raspberry-pi-zero/?resellerType=home |
| Pi 2 | 900MHz quad-core ARM Cortex-A7 CPU | 1GB | | | raspi2 | | https://www.raspberrypi.org/products/raspberry-pi-2-model-b/?resellerType=home |
| BeagleBone Black, PocketBeagle | AM335x 1GHz ARM® Cortex-A8 | 512MB | | | | https://wiki.osdev.org/ARM_Beagleboard | https://beagleboard.org/black |
| NanoPi NEO LTS | Allwinner H3, quad-core Cortex-A7 | 256MB/512MB | | | | | https://www.friendlyarm.com/index.php?route=product/product&product_id=132 |
| iMX233-OLinuXino-MINI | iMX233 ARM926J processor at 454MHz | 64MB | | | | | https://www.olimex.com/Products/OLinuXino/iMX233/iMX233-OLinuXino-MINI/open-source-hardware |
| Lichee Nano | Allwinner F1C100s, ARM926EJ-S | 32MB | | | | | https://www.seeedstudio.com/Sipeed-Lichee-Nano-Linux-Development-Board-16M-Flash-WiFi-Version-p-2893.html |

https://www.twosixlabs.com/running-a-baremetal-beaglebone-black-part-1/ https://github.com/allexoll/BBB-BareMetal https://github.com/auselen/down-to-the-bone

https://octavosystems.com/app_notes/bare-metal-on-osd335x-using-u-boot/

[I enjoyed using][1] mdp to write slides: being able to hammer out Markdown gave a satisfying sense of flow, and I felt I was able to get the slides out of my head in a straightforward manner. But I knew that for my EuroBSDCon presentation I was going to have to include photos of equipment and maybe even demo videos.

Shelling out to vlc or feh for pictures and video wouldn't do; it would throw off both me and the audience. That ruled out using mdp for making slides, and it also ruled out using sent from suckless.

I canvassed around on Mastodon and tried out a bunch of other tools. The main factor in ruling out most of them was their handling of very long titles, something I couldn't avoid when the title of my talk was 84 characters long.

remarkjs was the tool I settled on.

remarkjs can take slides either as an external Markdown file, if you have a way to serve it to the JavaScript, or embedded in an HTML file. I ended up embedding the slides in the HTML file, as this was the fastest way to get from nothing to having some slides appearing. remarkjs has a boatload of documentation, which I thoroughly ignored until after the presentation. In fact, in the days afterwards, when I was toying with implementing a presentation view, I found that remarkjs already has one built in!
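For reference, embedding the slides comes down to placing the Markdown inside a textarea with the id "source" and calling remark.create(). The Python sketch below wraps Markdown in that boilerplate. The page layout follows remark's README. The function name embed_slides and the CDN URL are assumptions worth checking against the current remark documentation.

```python
import html

# remark.js boilerplate: the Markdown lives in <textarea id="source"> and
# remark.create() turns it into a slideshow when the page loads.
TEMPLATE = """<!DOCTYPE html>
<html>
  <head>
    <title>{title}</title>
    <meta charset="utf-8">
  </head>
  <body>
    <textarea id="source">
{markdown}
    </textarea>
    <script src="https://remarkjs.com/downloads/remark-latest.min.js"></script>
    <script>
      var slideshow = remark.create();
    </script>
  </body>
</html>
"""


def embed_slides(markdown: str, title: str = "Slides") -> str:
    """Wrap Markdown slides in a self-contained remark.js HTML page."""
    if "</textarea" in markdown.lower():
        # The browser would close the textarea early and truncate the deck.
        raise ValueError("slides must not contain a closing </textarea> tag")
    return TEMPLATE.format(title=html.escape(title), markdown=markdown)
```

remark's documentation also describes a sourceUrl option to remark.create() for loading the slides from a separate Markdown file instead, which is the "external file" route.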

remarkjs was great to author in, and the ability to add style to documents was a big bonus for me too. The flip side of having style was that I had to write some CSS to get videos into the right place on the slide, which was annoying, but it worked out well.

My mdp slides included diagrams, as most slide decks do, and I wanted diagrams in this slide deck too. The mdp diagrams are just ASCII art. Showing ASCII art in a web page is fine, and that is how I made a shareable version of the page, but I felt I could do better.

goat can render ASCII art diagrams, drawn with a restricted set of characters, into SVG diagrams.

The SVG output is very verbose, and really not something you would want to embed in the middle of a slide deck.

To make this manageable, I wrote a Python script to 'render' the document. The script searches the input for lines starting with 'diagram:', takes the remainder of the line as the name of a file in the diagrams directory, renders it with goat and substitutes the result.

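    import subprocess
    import sys

    # Replace each 'diagram: <file>' line in a remarkjs slide deck with the
    # SVG that goat renders from diagrams/<file>; the result goes to out.html.
    filename = sys.argv[1]
    cmd = "goat"  # set to "cat" to pass the raw ASCII art through when debugging

    with open(filename, 'r') as infile, open('out.html', 'w') as outfile:
        for l in infile:
            if not l.startswith('diagram:'):
                outfile.write(l)
                continue

            parts = l.split(' ')
            if len(parts) != 2:
                # Malformed directive: report it and keep the line as-is.
                print('bad line {}'.format(l), end='')
                outfile.write(l)
                continue

            diagram = 'diagrams/{}'.format(parts[1].strip())
            result = subprocess.run([cmd, diagram], stdout=subprocess.PIPE,
                                    encoding='utf-8')
            if result.returncode == 0:
                # Wrap the SVG in a remarkjs .center[...] block.
                outfile.write('.center[\n')
                outfile.write(result.stdout.rstrip('\n') + '\n')
                outfile.write(']\n')
            else:
                # goat's stderr goes straight to the terminal; echo its stdout
                # too and leave the directive line in place.
                print(result.stdout, end='')
                outfile.write(l)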

I was happy enough with remarkjs that I was considering adding a presentation mode. However, there are some downsides. Firefox really struggled when rendering slides: with 40MB MP4 video files, Firefox would peg all CPUs, and because the slides were all one page, the autoplaying videos dragged Firefox down the whole time.

remarkjs "supports" exporting to PDF via Chrome's print preview, but all I could get Chrome to do was hang. Someone else managed to get an export from Safari. Overall, not the best.

[1]: mdp post

Here’s a guide to my lazy setup for running multiple Firefox tabs in the same session over different networks using the magic of SOCKS.

The use case is that I sometimes want to access a web app or page which is only accessible via a specific network (e.g., my work network or Tor), but I most definitely don’t want the rest of my browsing traffic going through there.

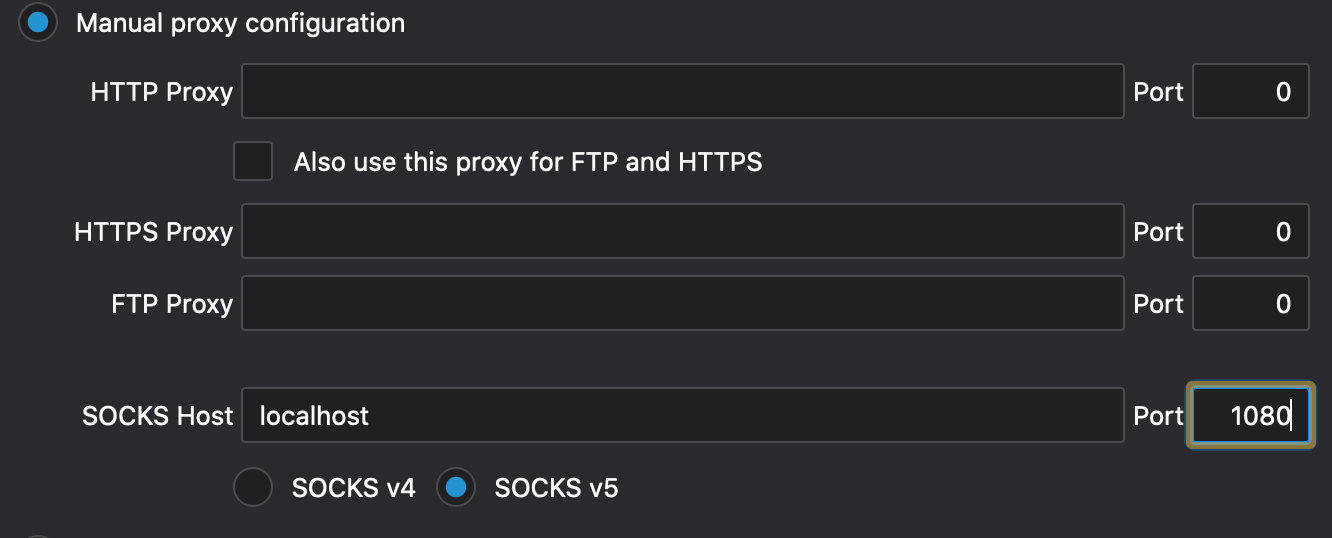

The general idea is to use long-running SSH tunnels to provide one or more SOCKS5 proxies that can be used by Firefox (or your browser of choice). SOCKS is a protocol that allows applications to request connections through a proxy server. Applications, such as Firefox, must be configured to use it.

Generally, to do this manually, you’d first SSH with dynamic forwarding into a host on the desired network:

    ssh -D1080 user@host

…and now a SOCKS proxy on localhost port 1080 is ready to forward connections through the remote host. Tor also provides a SOCKS proxy, by default on port 9050, that can be used in much the same way. This does not conflict with Tor Browser, which runs its own Tor daemon with its SOCKS proxy on port 9150, separate from the system Tor.

So, to use the proxy in a browser, the browser’s network settings should be changed to resemble something like this:

Firefox also has a checkbox for proxying DNS requests through the same connection.

It’s a good idea to proxy your DNS requests because 1) the remote DNS resolver might know names of resources you can’t access locally and 2) due to the prevalence of CDNs on the Internet, the IP addresses obtained this way will often correspond to servers physically closer to the tunnel endpoint, speeding up connections.
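Proxying DNS works because SOCKS5 lets the client send a hostname instead of an IP address (address type 0x03 in RFC 1928), so the name is resolved at the tunnel endpoint. The Python sketch below shows a no-authentication SOCKS5 CONNECT that does this. The function names are hypothetical, and the default proxy address assumes the ssh -D1080 tunnel above.

```python
import socket
import struct

# Minimal SOCKS5 client sketch (RFC 1928), "no authentication" method only.
# Address type 0x03 sends the hostname itself to the proxy, so DNS resolution
# happens at the far end of the tunnel rather than on this machine.


def greeting() -> bytes:
    # VER=5, NMETHODS=1, METHODS=[0x00 "no authentication required"]
    return b"\x05\x01\x00"


def connect_request(host: str, port: int) -> bytes:
    name = host.encode("idna")
    if len(name) > 255:
        raise ValueError("hostname too long for SOCKS5")
    # VER=5, CMD=1 (CONNECT), RSV=0, ATYP=3 (domain name), then the
    # length-prefixed name and the port in network byte order.
    return b"\x05\x01\x00\x03" + bytes([len(name)]) + name + struct.pack("!H", port)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or fail if the peer closes early."""
    data = b""
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("proxy closed the connection")
        data += chunk
    return data


def socks5_connect(host: str, port: int, proxy=("127.0.0.1", 1080), timeout=10.0):
    """Return a socket connected to host:port through the SOCKS5 proxy."""
    sock = socket.create_connection(proxy, timeout=timeout)
    try:
        sock.sendall(greeting())
        if recv_exact(sock, 2) != b"\x05\x00":
            raise ConnectionError("proxy did not accept the no-auth method")
        sock.sendall(connect_request(host, port))
        ver, rep, _rsv, atyp = recv_exact(sock, 4)
        if ver != 5 or rep != 0:
            raise ConnectionError(f"SOCKS5 CONNECT failed with reply code {rep}")
        # Discard the bound address reported by the proxy, then its 2-byte port.
        if atyp == 0x01:        # IPv4
            recv_exact(sock, 4)
        elif atyp == 0x04:      # IPv6
            recv_exact(sock, 16)
        elif atyp == 0x03:      # domain name, length-prefixed
            recv_exact(sock, recv_exact(sock, 1)[0])
        else:
            raise ConnectionError(f"unexpected address type {atyp}")
        recv_exact(sock, 2)
        return sock
    except Exception:
        sock.close()
        raise
```

Passing proxy=("127.0.0.1", 9050) would send the same connection through the system Tor daemon instead.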

These settings could be saved under a separate Firefox profile that can be fired up whenever the SSH connection is active. Any browser requests will be forwarded to the network of the host you’re SSHed into.

Now, this is an easy substitute for a VPN, but it still requires launching a new SSH connection and browser instance every time you want to browse via the remote network. Plus, multiple networks mean multiple profiles or multiple SSH connections, which is a pain to manage.

Enter Firefox containers and autossh. The first is an extension that allows you to keep website data, cookies, and cache separate between tabs and websites by assigning them to different containers.

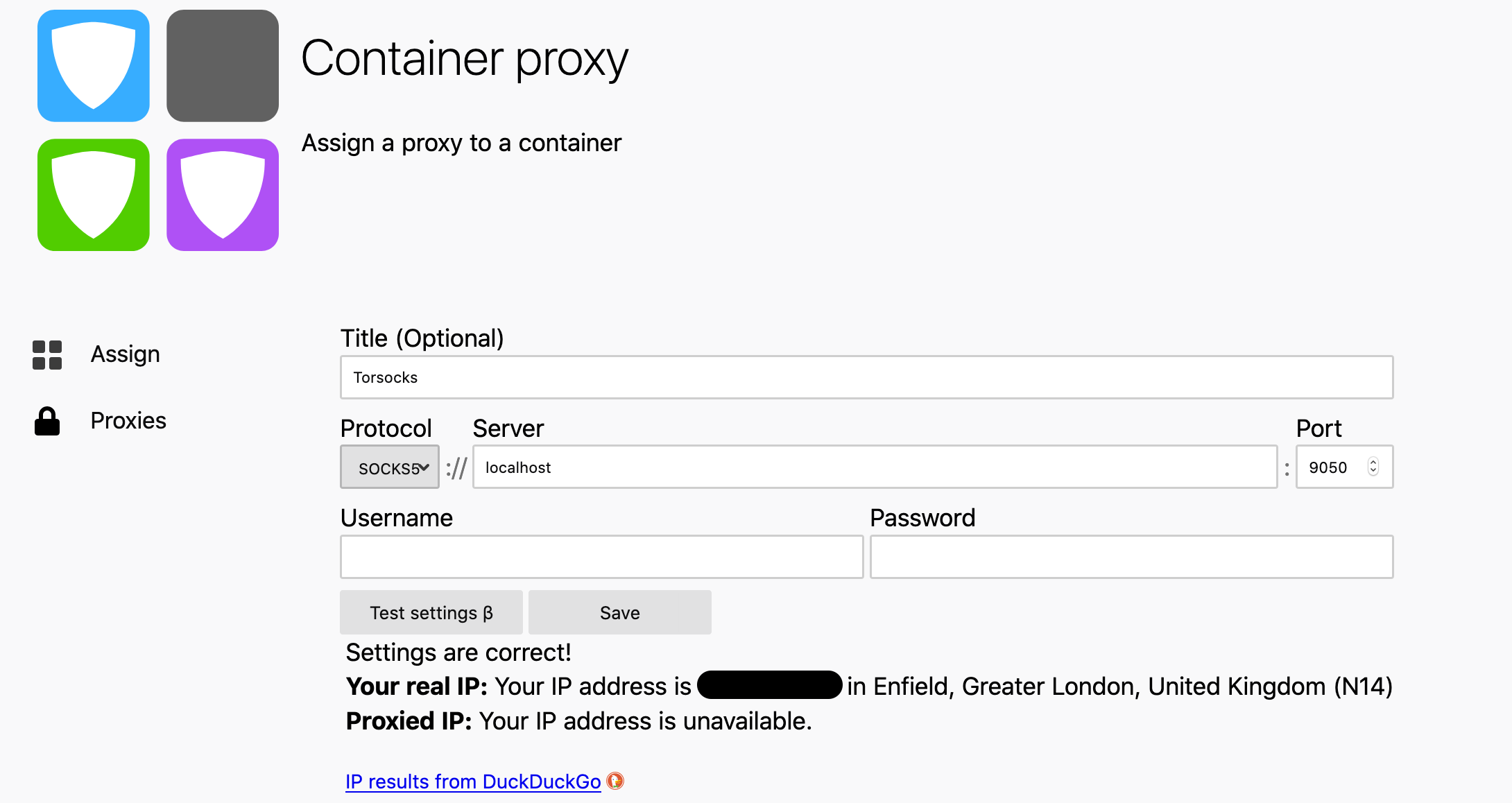

The second is a way to maintain an SSH tunnel indefinitely. The way to glue them together is Container Proxy, another Firefox extension that allows per-container proxy settings.

Here’s how it works:

Autossh and Tor

Autossh is a wrapper around ssh that keeps tunnels open indefinitely in the background. It can use any SSH option or config.

For simplicity, I have the following config specified for my proxy host in ~/.ssh/config:

    Host pxhost
        Hostname pxhost.example.com
        ServerAliveInterval 30
        ServerAliveCountMax 3
        DynamicForward 1080

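This command will run autossh in the background, forever keeping the connection alive:

    autossh -M 0 -f -N pxhost

Here -M 0 turns off autossh’s own monitoring port, so autossh only restarts ssh when ssh exits. The ServerAliveInterval and ServerAliveCountMax settings above make ssh exit after about 90 seconds without a response from the server. The -f flag sends autossh to the background, and -N tells ssh not to run a remote command.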

To persist this on reboot, I use a systemd service file for Linux and a @reboot cronjob for macOS.

I also have Tor configured to run at startup, allowing me to use it alongside other connections. Tor can run as a service on distros using systemd.

On macOS, I modified the .torrc file in my home directory to include RunAsDaemon 1, so just running tor with no options on the command line starts the SOCKS proxy in the background.
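Everything depends on the tunnels being up, so a quick health check helps after a reboot. This Python sketch sends a SOCKS5 greeting to each proxy and reports whether it answers. The PROXIES table is a hypothetical mirror of the setup described here: autossh's DynamicForward on port 1080 and the system Tor on its default port 9050.

```python
import socket

# Hypothetical table mirroring the setup above; adjust to your own ports.
PROXIES = {
    "Work": ("127.0.0.1", 1080),  # autossh DynamicForward
    "Tor": ("127.0.0.1", 9050),   # system Tor daemon
}


def speaks_socks5(addr, timeout=2.0) -> bool:
    """True if something at addr answers a no-auth SOCKS5 greeting."""
    try:
        with socket.create_connection(addr, timeout=timeout) as sock:
            sock.sendall(b"\x05\x01\x00")  # VER=5, 1 method, 0x00 = no auth
            return sock.recv(2) == b"\x05\x00"
    except OSError:
        return False  # refused, timed out or reset: tunnel not up


if __name__ == "__main__":
    for name, addr in PROXIES.items():
        status = "up" if speaks_socks5(addr) else "DOWN"
        print(f"{name:5} {addr[0]}:{addr[1]} {status}")
```

Checking the protocol reply, rather than just whether the port accepts connections, catches the case where something else has grabbed the port.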

The Firefox containers extension (Firefox Multi-Account Containers) can be found in the official Firefox add-ons store.

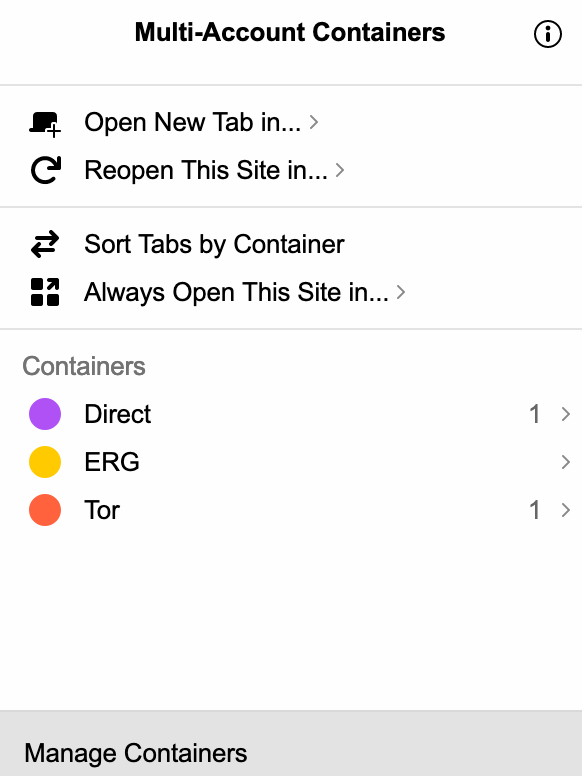

I have three containers: a Direct container for day-to-day browsing without a proxy, a Work container for accessing some infrastructure at work via an SSH connection into my work computer, and a Tor container for looking at .onion addresses or other web pages over Tor:

Tabs opened in each container are colour-coded and easy to keep track of.

An important note about the Tor container: using Tor as a proxy without Tor Browser does not provide anonymity, because any other browser will leak client information that allows third parties to identify users. I do this mostly for convenience and sometimes to avoid eavesdropping by my ISP. However, if you want anonymity, USE TOR BROWSER!

Proxies for containers are not supported natively by the official Firefox containers extension. At the moment, another extension, Container Proxy, is required to make the containers use the tunnels. While it is not checked by Mozilla, it is open source, and the code is auditable at https://github.com/bekh6ex/firefox-container-proxy.

It lets you configure and test the proxies with DuckDuckGo, and assign them to containers:

If the tunnels are set up to persist on reboot, and your Firefox profile is not entirely erased with the latest update, this is how it looks/works:

That’s it. Containers are cool. Use more containers, before Mozilla dies off.